BibTeX

@inproceedings{RGCL2024Mei,
    title = "Improving Hateful Meme Detection through Retrieval-Guided Contrastive Learning",
    author = "Mei, Jingbiao and
      Chen, Jinghong and
      Lin, Weizhe and
      Byrne, Bill and
      Tomalin, Marcus",
    editor = "Ku, Lun-Wei and
      Martins, Andre and
      Srikumar, Vivek",
    booktitle = "Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers)",
    month = aug,
    year = "2024",
    address = "Bangkok, Thailand",
    publisher = "Association for Computational Linguistics",
    url = "https://aclanthology.org/2024.acl-long.291",
    doi = "10.18653/v1/2024.acl-long.291",
    pages = "5333--5347",
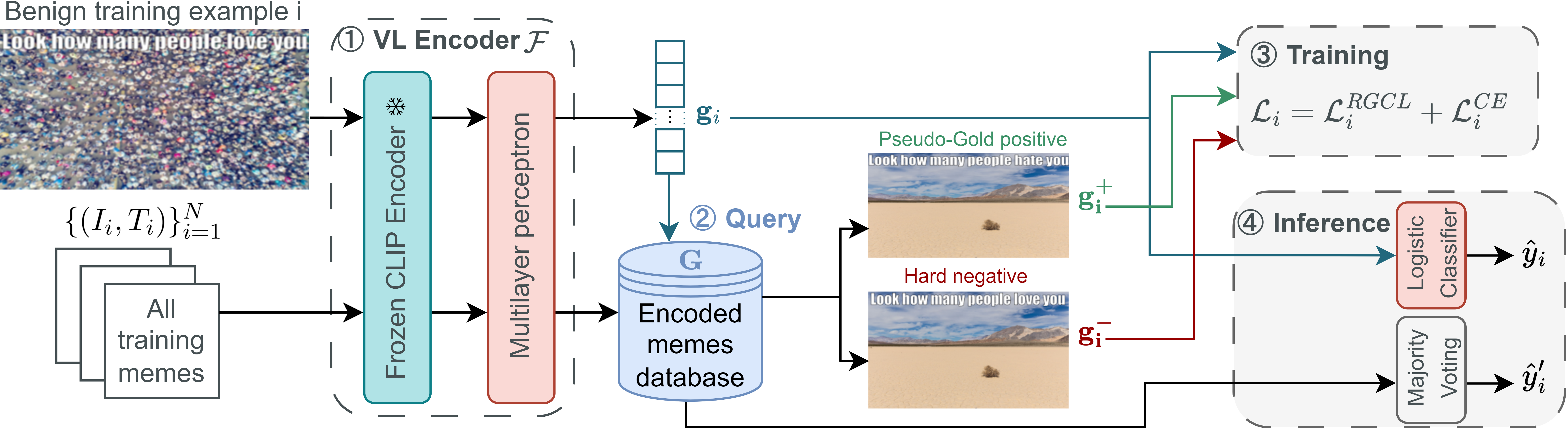

abstract = "Hateful memes have emerged as a significant concern on the Internet. Detecting hateful memes requires the system to jointly understand the visual and textual modalities. Our investigation reveals that the embedding space of existing CLIP-based systems lacks sensitivity to subtle differences in memes that are vital for correct hatefulness classification. We propose constructing a hatefulness-aware embedding space through retrieval-guided contrastive training. Our approach achieves state-of-the-art performance on the HatefulMemes dataset with an AUROC of 87.0, outperforming much larger fine-tuned large multimodal models. We demonstrate a retrieval-based hateful memes detection system, which is capable of identifying hatefulness based on data unseen in training. This allows developers to update the hateful memes detection system by simply adding new examples without retraining {---} a desirable feature for real services in the constantly evolving landscape of hateful memes on the Internet.",

}